In distributed systems, many "non-critical" values, such as the number of likes or retweets, are served under eventual consistency. Because of replication latency between geographically distributed replicas, one replica can return a different value than another if it hasn't quite 'caught up' with the latest writes.

There are no guarantees that reads will see writes in order, or up to any particular point -- only that the replicas will "eventually" converge once new writes stop arriving. This is the weakest consistency level that can be set in Cosmos DB, for example.
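
To make the "eventually converge" part concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the `Replica` class, the `catch_up` method and the region names are hypothetical, not how Cosmos DB or any real database is implemented. For simplicity each replica applies a shared write log in order, which is stricter than eventual consistency actually requires; since every write is just "+1 like", the order makes no difference to the totals.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    """Hypothetical replica that applies a shared write log at its own pace."""
    name: str
    applied: int = 0  # number of log entries applied so far
    likes: int = 0

    def catch_up(self, log, max_entries):
        """Apply up to max_entries pending writes from the log."""
        pending = log[self.applied:self.applied + max_entries]
        self.likes += sum(pending)
        self.applied += len(pending)


# Ten "+1 like" writes accepted by the system.
log = [1] * 10

replicas = [Replica("eu"), Replica("us"), Replica("asia")]

# Assumption: each replica has seen a different amount of the log so far.
for replica, seen in zip(replicas, (10, 7, 4)):
    replica.catch_up(log, seen)

stale_reads = {r.name: r.likes for r in replicas}
print(stale_reads)  # {'eu': 10, 'us': 7, 'asia': 4}

# Once writes stop and replication continues, every replica converges.
for replica in replicas:
    replica.catch_up(log, len(log))

converged_reads = {r.name: r.likes for r in replicas}
print(converged_reads)  # {'eu': 10, 'us': 10, 'asia': 10}
```

Which value a reader sees in the meantime depends entirely on which replica answers the request.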

[Conceptually there could be a "never guaranteed to make it to all the replicas" consistency level, or perhaps inconsistency, but I don't think that would have much utility... although I'm sure these systems are out there, having been implemented by well-intentioned people reinventing the wheel and somehow ending up in a state where updates can be dropped. Presumably by accident!]

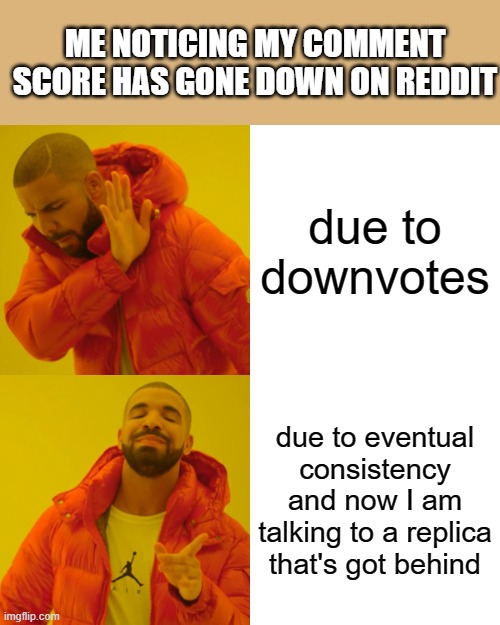

This is why, on sites like Reddit, a comment's score can seem to jump between e.g. 150, 145, 152, 147... upvotes when refreshing the page.

Of course, it could be due to downvotes as well!
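
Setting downvotes aside, the jumping effect can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how Reddit works: the replica names and counts are invented, and I am assuming a load balancer that sends each page refresh to the next replica in turn.

```python
from itertools import cycle

# Hypothetical snapshot: upvote totals as currently seen by four replicas
# that are at different points in applying recent votes.
replica_counts = {"a": 150, "b": 145, "c": 152, "d": 147}

# Assumption: a load balancer sends each page refresh to the next replica.
next_replica = cycle(replica_counts)


def refresh():
    """Return the upvote count from whichever replica serves this request."""
    return replica_counts[next(next_replica)]


seen = [refresh() for _ in range(6)]
print(seen)  # [150, 145, 152, 147, 150, 145]
```

No votes are lost or added between refreshes here; the count only appears to move because each request is answered by a replica with a different view of the data.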